Motive is the software package used to run our OptiTrack motion capture system. This guide explains how to set up and operate the system using Motive 2.3.1. By the end of this guide, you should know how to use the motion capture system to obtain ground truth data.

Prerequisites

Hardware

- Dedicated MSI Laptop (Windows 10, Motive 2.3.1 pre-installed)

- Motive License USB dongle (must be plugged into the MSI laptop for Motive to launch)

- Motion capture cameras (10 units, connected via PoE switch/modem)

- Calibration wand

- Calibration square

- Reflective markers

Network

- Dedicated modem/router for the motion capture system (isolated network)

- Ethernet cables for connecting cameras, modem, and laptop

Connecting the Isolated Network

Note: This step may not need to be repeated every time. If the motion capture network is already configured, skip to the next section.

Connect the motion capture modem/router to the ethernet wall ports.

Connect the MSI laptop to this modem via ethernet.

At the main lab modem, disconnect the black ethernet cable leading from the wall port, and connect the cream coloured cable to the wall port.

Next, unplug the green cable that connects the modem to the network hub, and connect the other end of the cream coloured cable in its place.

Doing this temporarily removes ethernet access from the main lab network. Double-check that no one else is relying on the main network via ethernet before switching.

Once complete, the motion capture system is on its own isolated network. The MSI laptop and all cameras should now communicate exclusively through this network.

Configuring Motive

- Open the MSI laptop and boot into Windows 10.

- Insert the Motive USB license dongle (if not already inserted).

- Launch Motive 2.3.1 (allow a few seconds for the license to be detected).

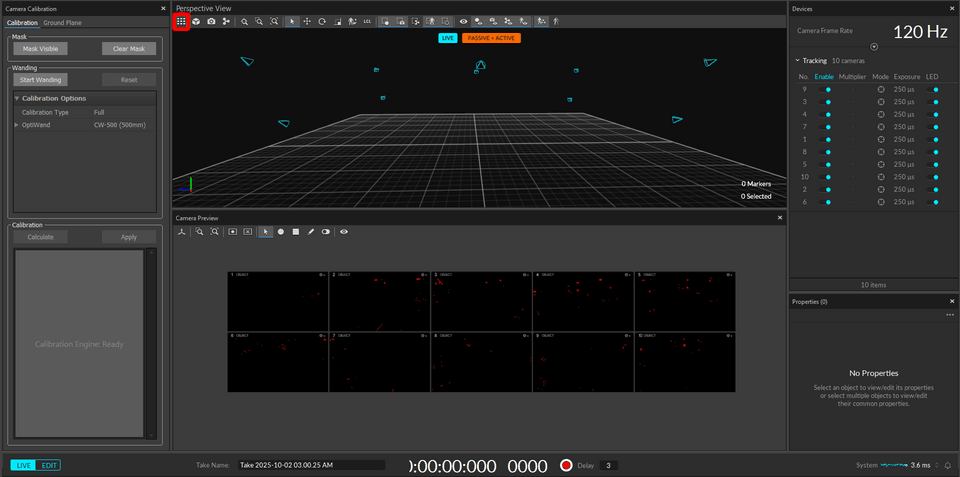

- In the Motive interface, check the camera list. You should see 10 connected cameras.

- If cameras are missing, revisit the Connecting the Isolated Network step.

The initial view of the software should look something like the figure above. You can toggle between Camera View and Perspective View using the control highlighted by the red box.

Camera Calibration

Before recording any datasets, the camera system must be calibrated to ensure accurate tracking.

Recalibrate whenever a camera is moved or bumped, even slightly.

Best practice: calibrate before every data recording session, as the process only takes a few minutes.

Clear the capture field of all objects.

In Motive, go to Camera View, and click Mask Visible.

- This masks out static background reflections.

Ensure no reflective markers you intend to use (e.g., calibration wand) are visible before applying the mask.

In Motive, select all 10 cameras in the camera pane and click Start Wanding.

- The 3D viewport will highlight red.

- A calibration table will appear, showing the number of samples collected per camera. Aim for roughly equal counts across all cameras.
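The following Python sketch makes "roughly equal" concrete by flagging cameras that lag behind the rest. It is a hypothetical helper, not part of Motive. It assumes you have copied the per-camera sample counts from the calibration table into a dictionary by hand. The 50% threshold relative to the median is an arbitrary choice for illustration.

```python
from statistics import median


def under_sampled_cameras(samples: dict[str, int], min_fraction: float = 0.5) -> list[str]:
    """Return cameras whose sample count is below min_fraction of the median count.

    samples: camera name -> sample count, copied by hand from Motive's
             calibration table (hypothetical input format).
    min_fraction: arbitrary illustrative threshold, not a Motive setting.
    """
    if not samples:
        return []
    threshold = min_fraction * median(samples.values())
    # Sorted so the result is stable and easy to read
    return sorted(cam for cam, count in samples.items() if count < threshold)
```

For example, `under_sampled_cameras({"cam1": 2000, "cam2": 1800, "cam3": 700, "cam4": 2200})` returns `['cam3']`. That result suggests making more wand passes through that camera's field of view. The sketch uses the median rather than the mean so that one heavily over-sampled camera does not make all the others look under-sampled.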

Power on the calibration wand (switch on the back) once you are on the field.

Move the wand smoothly through the capture volume.

- Refer to this tutorial video (at 1:26) for a demonstration.

Once all cameras have adequate coverage, stop the wanding process by powering off the wand before leaving the field. This ensures that the cameras don't track the wand outside our desired capture volume.

While you are performing the calibration, look at each camera. When a camera detects the calibration wand, a green ring lights up around its LEDs. The completeness of this ring indicates how many unique samples the camera has received, which is important for calibration, so it can be used as an indicator of when to stop wanding.

Click Calculate to finalise calibration.

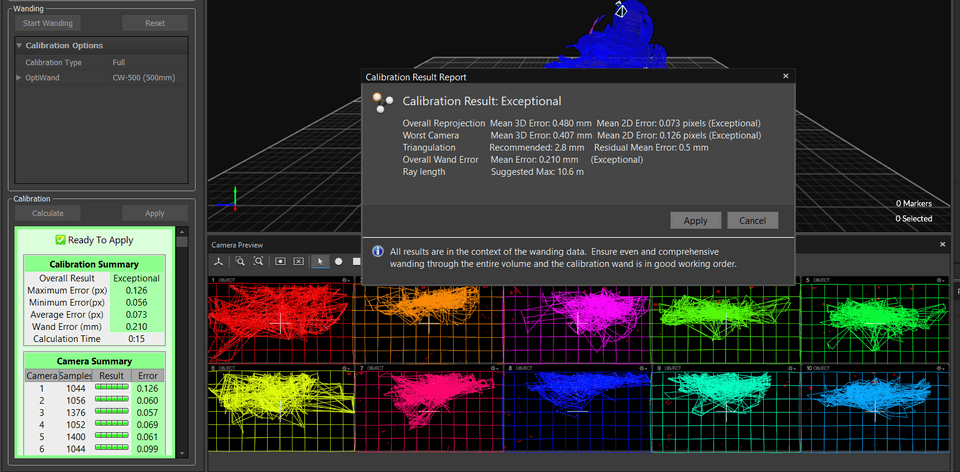

- Watch the result message carefully. Aim for Exceptional calibration quality (green status) as shown in the image below.

![Camera Calibration]()

Exceptional Result

If calibration quality is below Exceptional, repeat the wanding process with slower and wider coverage.

Setting the Ground Plane

Following camera calibration, you may notice that the 3D view of the cameras within Motive is skewed. This is because the system does not yet know where the ground plane is. To fix this, we use the calibration square to set the ground plane.

Obtain the calibration square and place it in the middle of the field, with its Z direction facing the carpark 8 side of the ES building, as shown in the image below.

![Camera Calibration]()

Setting the Calibration Square

Select Set Ground Plane. You will be prompted to save the calibration file. Ensure that the directory is `Desktop->calibration` and click Save. You should see the 3D view update so that the cameras are now referenced from this plane.

The calibration process is now complete.

Creating a Rigid Body

We are now ready to create our first rigid body. In our case, we can define a rigid body as a physical object with fixed markers whose relative distances remain constant, ensuring it doesn't change shape during movement.

We need to obtain a robot and some OptiTrack reflective markers to create a rigid body.

Place the reflective markers on the torso of the robot. We recommend placing four on the front of the robot's torso and two on the back. Place the markers asymmetrically and reasonably far apart, so that Motive can identify the rigid body's orientation unambiguously.
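Both placement guidelines can be expressed in terms of the distances between every pair of markers. The Python sketch below illustrates that idea; it is not how Motive solves rigid bodies internally. It assumes marker positions in metres, given as (x, y, z) tuples and listed in the same order in every frame, and its tolerances are arbitrary.

- `is_rigid` checks that all pairwise distances stay (nearly) constant between two frames.
- `ambiguous_pairs` counts near-equal distances, which is what a symmetric layout produces.

```python
from itertools import combinations
from math import dist

Point = tuple[float, float, float]


def pairwise_distances(markers: list[Point]) -> list[float]:
    """Distance between every pair of markers, in a fixed (i, j) order."""
    return [dist(a, b) for a, b in combinations(markers, 2)]


def is_rigid(frame_a: list[Point], frame_b: list[Point], tol: float = 0.002) -> bool:
    """True if every pairwise distance changes by less than tol metres between frames.

    Assumes both frames list the same markers in the same order.
    """
    before = pairwise_distances(frame_a)
    after = pairwise_distances(frame_b)
    return all(abs(d1 - d2) < tol for d1, d2 in zip(before, after))


def ambiguous_pairs(markers: list[Point], tol: float = 0.01) -> int:
    """Count pairs of marker-to-marker distances that differ by less than tol metres.

    Symmetric layouts repeat distances, so a count of zero is a good sign
    that the layout cannot be confused with a relabelled copy of itself.
    """
    distances = pairwise_distances(markers)
    return sum(1 for d1, d2 in combinations(distances, 2) if abs(d1 - d2) < tol)
```

A square of four markers, for example, has seven pairs of equal distances. A spread-out, irregular layout should score zero.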

The torso is our key reference frame. We express the torso’s pose in the world as Htw (torso in the world frame).
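As a worked example, a pose such as Htw is commonly represented as a 4×4 homogeneous transform that combines a 3×3 rotation R and a translation p. The Python sketch below assumes that Htw, as the torso's pose in the world frame, maps points expressed in the torso frame into world coordinates. Confirm the convention used in the codebase before relying on this direction. The pose values are made up for illustration: the torso sits 0.5 m above the world origin, rotated 90° about the vertical z axis.

```python
from math import cos, pi, sin


def homogeneous(rotation, translation):
    """Build the 4x4 transform [[R, p], [0, 0, 0, 1]] as nested lists."""
    return [list(rotation[i]) + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def rot_z(theta):
    """3x3 rotation matrix for an angle theta (radians) about the z axis."""
    c, s = cos(theta), sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(H, point):
    """Transform a 3D point with H, treating it as the homogeneous vector (x, y, z, 1)."""
    v = list(point) + [1.0]
    return tuple(sum(H[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical pose: torso 0.5 m above the world origin, yawed 90 degrees.
Htw = homogeneous(rot_z(pi / 2), [0.0, 0.0, 0.5])

# A point 0.1 m along the torso's x axis, expressed in world coordinates.
print(apply(Htw, (0.1, 0.0, 0.0)))
```

Running it prints a point of approximately (0.0, 0.1, 0.5). After the 90° rotation, the torso's x axis points along the world y axis, and the translation column adds the 0.5 m height.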

By tracking the torso, we ensure all motion data is consistently expressed in the robot’s primary body frame.

Place the robot in the centre of the field and run `keyboardwalk`. Once the robot is standing, create a rigid body in Motive: highlight the markers that are visible on the robot, right-click, hover over Rigid Body and select Create From Selected Markers.

Because the lighting conditions in the laboratory are not ideal, you may notice some fake markers displayed in Motive. These may be caused by reflections from the robot's metal components. To distinguish real markers from fake ones, reposition the robot to minimise reflections until the fake markers disappear.

You have now successfully created a rigid body. To change its settings, select all of the rigid body's markers and open the General Settings tab that appears in the bottom right of the screen. Ensure that the Streaming ID is set to 1, and leave the other settings at their defaults.

Recording a Ground Truth dataset

Before we can record our ground truth dataset, we must ensure that we are receiving messages from our Motion Capture system.

IP Configuration

We require the robot, our computer running the binary, and the mocap system to be on the same network. The desired network is robocup-x.

- Connect to the robot using the available portable screen and keyboard.

- Run `sudo ./robocupconfiguration`.

- Change the network IP address to ensure that the three systems are on the same subnet.

- Change the network to `robocup-x` and configure the network.
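Before moving on, it can help to confirm that the three addresses really do share a subnet. The Python sketch below uses the standard `ipaddress` module. The example addresses and the /24 prefix (netmask 255.255.255.0) are assumptions for illustration, so substitute the values configured on `robocup-x`.

```python
import ipaddress


def same_subnet(addresses: dict[str, str], prefix_len: int = 24) -> bool:
    """True if every IPv4 address falls in the same network for the given prefix length.

    addresses: device name -> IPv4 address string (example values are hypothetical).
    prefix_len: assumed netmask length; 24 corresponds to 255.255.255.0.
    """
    networks = {ipaddress.ip_interface(f"{ip}/{prefix_len}").network for ip in addresses.values()}
    return len(networks) == 1
```

For example, `same_subnet({"robot": "10.1.1.5", "laptop": "10.1.1.20", "mocap": "10.1.1.50"})` returns `True`. Changing the robot to `10.1.2.5` makes it return `False` under a /24 prefix.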

Recording a dataset

Navigate to `NatNet.yaml` and ensure that `multicast_address` is set to `239.255.42.99`. Also ensure `dump_packets: false`; otherwise every packet will be dumped onto the robot during recording, which we don't want.

Navigate to `SensorFilter.yaml` and set `use_ground_truth: true`.

Configure with `natnet`, build the code and install it onto the robot.

Run the `natnet` role and view the console. If everything was successful, you should see `Connected to X.X.X.X (Motive 2.3.1.1) over NatNet 3.1`. If you see `No ground truth data received, but use_ground_truth is true`, ensure the previous step was completed correctly.

You should also test this within NUsight. Move the robot around and verify that it moves sensibly, given that it should be using the ground truth data.
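If the `natnet` role reports no data, it can help to check independently whether Motive's multicast stream is reaching the network. The Python sketch below joins the multicast group from `NatNet.yaml` and counts the UDP packets that arrive within a few seconds. It assumes Motive is streaming in multicast mode and that the data port is 1511, Motive's usual default; check the streaming settings in Motive. `count_packets` and `membership_request` are hypothetical helper names.

```python
import socket
import struct
import time

MULTICAST_GROUP = "239.255.42.99"  # multicast_address from NatNet.yaml
DATA_PORT = 1511  # assumption: Motive's default NatNet data port


def membership_request(group: str, interface: str = "0.0.0.0") -> bytes:
    """Pack the ip_mreq structure (group address, local interface) used to join a group."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def count_packets(duration: float = 5.0, group: str = MULTICAST_GROUP,
                  port: int = DATA_PORT, interface: str = "0.0.0.0") -> int:
    """Join the multicast group and count UDP packets received within `duration` seconds."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    try:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind(("", port))
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                        membership_request(group, interface))
        count = 0
        deadline = time.monotonic() + duration
        # Keep reading until the overall deadline passes, not just until one read times out
        while (remaining := deadline - time.monotonic()) > 0:
            sock.settimeout(remaining)
            try:
                sock.recvfrom(65535)
            except socket.timeout:
                break
            count += 1
        return count
    finally:
        sock.close()
```

Call `count_packets()` on a machine connected to `robocup-x` while Motive is streaming. A count of zero suggests the stream is not reaching the network, or is blocked by a firewall on that machine, rather than a problem with the robot's configuration. On a machine with several network adapters, pass that machine's `robocup-x` address as `interface` so the group is joined on the right adapter.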

We are now ready to record a ground truth dataset!

Navigate to `DataLogging.yaml` and add `message.localisation.RobotPoseGroundTruth: true`.

If you want to record a dataset that compares ground truth to another module, you must also set the messages your module requires to `true`. You will also need to set `use_ground_truth: false` in `SensorFilter.yaml` to ensure we don't override our odometry messages with ground truth data.

Navigate to the role you will be running, and add `input::NatNet`, `localisation::Mocap` and `support::logging::DataLogging`. Configure your role, build it and install it on the robot.
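The configuration steps in this section can be summarised as a checklist, which the Python sketch below encodes as a hypothetical helper. It assumes the three YAML files have already been parsed into dictionaries flattened to the keys named in this guide; the real files may nest these keys differently. It also assumes the role's modules are available as a list of strings. Set `comparing_module=True` when recording against another module, in which case `use_ground_truth` must be `false`.

```python
EXPECTED_MULTICAST = "239.255.42.99"
REQUIRED_MODULES = {"input::NatNet", "localisation::Mocap", "support::logging::DataLogging"}


def ground_truth_config_problems(natnet: dict, sensor_filter: dict, data_logging: dict,
                                 role_modules: list[str], comparing_module: bool = False) -> list[str]:
    """Return human-readable problems; an empty list means the checklist passes.

    The dicts stand for parsed NatNet.yaml, SensorFilter.yaml and DataLogging.yaml,
    flattened to the keys used in this guide (hypothetical layout).
    """
    problems = []
    if natnet.get("multicast_address") != EXPECTED_MULTICAST:
        problems.append(f"NatNet.yaml: multicast_address should be {EXPECTED_MULTICAST}")
    if natnet.get("dump_packets") is not False:
        problems.append("NatNet.yaml: dump_packets should be false")
    if data_logging.get("message.localisation.RobotPoseGroundTruth") is not True:
        problems.append("DataLogging.yaml: message.localisation.RobotPoseGroundTruth should be true")
    # When comparing against another module, ground truth must not override odometry
    expected_gt = not comparing_module
    if sensor_filter.get("use_ground_truth") is not expected_gt:
        problems.append(f"SensorFilter.yaml: use_ground_truth should be {str(expected_gt).lower()}")
    for module in sorted(REQUIRED_MODULES - set(role_modules)):
        problems.append(f"role: missing module {module}")
    return problems
```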

Run the binary, then use `scp` to copy the generated `.nbs` file and its `.idx` file from the `logging` folder.
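The copy step can be scripted. The Python sketch below only builds the `scp` argument list. The user name, robot address, remote directory and recording name are hypothetical placeholders, so substitute your own. The robot expands the remote wildcard `<stem>.*`, so it picks up both the `.nbs` file and its `.idx` file as long as both names start with the same stem.

```python
from pathlib import PurePosixPath


def scp_recording_command(user: str, host: str, remote_dir: str, stem: str,
                          local_dir: str = ".") -> list[str]:
    """Build an scp command copying every file named <stem>.* from the robot.

    All arguments are placeholders chosen by the caller; the wildcard is
    matched on the robot, so the .nbs file and its .idx file both come across.
    """
    remote = PurePosixPath(remote_dir) / f"{stem}.*"
    return ["scp", f"{user}@{host}:{remote}", local_dir]
```

Pass the list to `subprocess.run(command, check=True)` to perform the copy. Because no local shell is involved, the `*` reaches `scp` unexpanded and is matched on the robot.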